60s

An interactive music system

Fall 2017

Introduction

Computer technology opens up enormous possibilities in music performance and production. It makes it possible to design instruments with creative metaphors and many-to-many mappings, which can lead to spectacular performances and a practically unlimited range of sounds. “60s” is my take on the design of a many-to-many mapping interactive music system. The concept of this instrument comes from two aspects: creative gesture mapping with an intuitive visual presentation, and the creation of tension and feedback between the user and the instrument.

Gesture Mapping: Supernatural Magic

Gesture mapping is one of the most challenging parts of many-to-many mapping system design. It is hard to take advantage of complex mapping while keeping it intuitive and transparent. The gesture mapping here is inspired by the concept of “creating music like a wizard using a magic wand.” Technologies such as the Leap Motion and Microsoft Kinect, combined with machine learning classification, can recognize people’s gestures fairly accurately, and this capability can bring astonishing effects to a music performance. These touchless input methods give both the user and the audience an illusion of the supernatural. However, the gesture mapping should still keep some degree of transparency. In a good design, the system should seem “magical” at first glance, but once the audience has been told the theory behind it, it should also be easy to pick up. At the same time, transparency should not come at the expense of the depth of the system.

Tension between Performer and Instrument

I believe that creating tension between the instrument and the performer pushes the performer’s limits and produces music full of energy. For example, many musicians rent a studio for just a week to record an album; the time limit spurs their creativity and productivity. The guitarist of the White Stripes once mentioned in an interview that he places his instruments relatively far apart on stage so that he has to improvise before he can move to another instrument. Such intentional discomfort stimulates the performance and stretches the musician’s musical limits. Therefore, I believe that intentionally designing a musical instrument that somehow “discomforts” the musician in order to inspire them is an experimental but reasonable concept.

Control Structure

The system consists of three sound modules, each taking different inputs. The leading sound is a computer vision mapping system that uses input from a webcam. The webcam is placed on the desk so that performers can move their hands, or any object they are holding, to trigger notes. The second sound engine is the drone sound in the background. It makes a constant, haunting sound, and the user can change the center frequency of its low-pass filter with a hand gesture of holding or releasing a crystal ball. The hand gesture data is collected by the Leap Motion sensor.

The third module is the key to the system, and it has only one input: a click. This sound engine is based on micropolyphony, an idea from the Hungarian composer György Ligeti. Micropolyphony is a musical texture consisting of many lines of dense canons moving at different tempos or rhythms, which together create a haunting, cluster-like sound. The technique is used here to evoke apophenia, a psychological phenomenon in which people tend to search for order in total chaos, and this creates a disharmonious, unpleasant feeling. In this system, once the click has been triggered, the system starts a new MIDI sequence every 10 seconds. There are five MIDI sequences in total. Each uses the same scale and the same phrase but plays at a different tempo. As time passes, more and more layers of MIDI sequences overlap, creating a clustered disharmony. By the time 60 seconds have been reached, the disharmony has taken over the entire system. The user must therefore fight against the system: the time available for creating music is limited, and the pressing sense of disharmony creates tension between the performer and the instrument.
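The layering of one phrase at several tempos can be sketched in Python. This is only an illustration, not the patch used in the project: the phrase, the tempo values, and the function names are hypothetical. Only the five layers, the 10-second spacing, and the 60-second limit come from the description above, and the sketch assumes the first layer enters 10 seconds after the click.

```python
# Hypothetical phrase: MIDI pitches from C major pentatonic, one note per beat.
PHRASE = [60, 62, 64, 67, 69]

# Hypothetical tempos in beats per minute, one per layer (assumed values).
TEMPOS_BPM = [60, 75, 90, 105, 120]

ENTRY_INTERVAL_S = 10.0  # a new layer enters every 10 s (from the text)
TOTAL_S = 60.0           # the session ends at 60 s (from the text)


def layer_onsets(layer, phrase=PHRASE, tempos=TEMPOS_BPM):
    """Return (time_s, pitch, layer) tuples for one looping layer."""
    start = (layer + 1) * ENTRY_INTERVAL_S  # assumption: first layer at 10 s
    beat = 60.0 / tempos[layer]             # seconds per note at this tempo
    events = []
    i = 0
    while start + i * beat < TOTAL_S:
        events.append((start + i * beat, phrase[i % len(phrase)], layer))
        i += 1
    return events


def all_onsets():
    """Merge every layer into one time-ordered event list."""
    events = []
    for layer in range(len(TEMPOS_BPM)):
        events.extend(layer_onsets(layer))
    return sorted(events)
```

Because every layer loops the same phrase at its own tempo, the onsets drift apart as more layers enter, which is what produces the cluster-like texture.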

Wand and Hand Control Demo

Magical Sphere Drone Sound Demo

Implementation: Leading Sound Algorithm

The leading sound takes its input from a webcam placed on the desk. I chose a webcam instead of another input device such as the Leap Motion because the Leap Motion is already used to recognize hand gestures for the drone module. The Leap Motion is an infrared camera whose tracking is built around hands, so it is not suited to tracking arbitrary objects such as a wand. Using a wand in one hand, and the other hand to control the drone module through a different kind of input device, also reduces noise and interference between the two controls and keeps the performance experience reliable. The webcam video is divided into 10 * 9 boxes, and each box corresponds to a MIDI note. Each box computes the difference from its previous state, and the difference is displayed as white. The whiter the box, the faster the object is moving, and this value is mapped to the velocity of the MIDI note. This control over velocity gives the system an organic feel. The MIDI notes are sent to Live, where the audio synthesis is done. The notes are mapped to the C major pentatonic scale so that everything in the leading sound module sounds harmonious. Thus, even a user without any musical background can play the instrument without any issue. On the other hand, micro-level control such as the velocity mapping raises the ceiling of the system, so that it stays interesting to use over an extended period of time.
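The frame-differencing idea can be illustrated with a small Python sketch. It is not the project's actual patch: the function names, the motion threshold, the lowest note, and the choice to wrap the 90 boxes onto a three-octave pentatonic range are all assumptions made for illustration, since the text only specifies the 10 * 9 grid, the pentatonic mapping, and the link between whiteness and velocity.

```python
GRID_COLS, GRID_ROWS = 10, 9      # 10 * 9 boxes (from the text)
PENTATONIC = [0, 2, 4, 7, 9]      # C major pentatonic, semitones above C
BASE_NOTE = 48                    # assumed lowest note (hypothetical)
NOTE_RANGE = 15                   # assumed: boxes wrap onto three octaves


def box_to_note(index):
    """Map a box index (0..89) onto a C major pentatonic MIDI note."""
    octave, degree = divmod(index % NOTE_RANGE, len(PENTATONIC))
    return BASE_NOTE + 12 * octave + PENTATONIC[degree]


def frame_to_notes(prev, curr, threshold=8):
    """Compare two grayscale frames and return (note, velocity) per moving box.

    prev and curr are equally sized lists of rows with pixel values 0..255.
    The threshold (assumed value) suppresses boxes with almost no motion.
    """
    height, width = len(curr), len(curr[0])
    box_h, box_w = height // GRID_ROWS, width // GRID_COLS
    notes = []
    for row in range(GRID_ROWS):
        for col in range(GRID_COLS):
            total = 0
            for y in range(row * box_h, (row + 1) * box_h):
                for x in range(col * box_w, (col + 1) * box_w):
                    total += abs(curr[y][x] - prev[y][x])
            mean_diff = total / (box_h * box_w)  # the box's "whiteness", 0..255
            if mean_diff >= threshold:
                velocity = max(1, min(127, round(mean_diff / 255 * 127)))
                notes.append((box_to_note(row * GRID_COLS + col), velocity))
    return notes
```

A larger mean difference inside a box means faster motion there, so the same box can produce a soft or a loud note depending on how quickly the wand passes through it.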

Drone Module Signal Flow

Implementation: Drone Sound Algorithm

The drone sound is built from BEAP objects with a traditional subtractive synthesizer signal flow. A heavy feedback reverb is added at the last stage of the signal flow to create the drone feeling. The drone is filtered by a low-pass filter whose center frequency can be manipulated with hand gestures. The hand position data is collected by the Leap Motion, and the classification is done by Wekinator. The overall panning of the system can also be controlled by the hand position.
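As a rough sketch of how a gesture value might drive the filter and the panning, the Python below assumes the gesture model delivers a single control value between 0 and 1 and that the hand position is normalized from left (0) to right (1). The frequency bounds, the logarithmic scaling, the equal-power pan law, and all function names are assumptions for illustration; the text does not specify them.

```python
import math

MIN_CUTOFF_HZ = 100.0    # assumed lower bound (hypothetical)
MAX_CUTOFF_HZ = 8000.0   # assumed upper bound (hypothetical)


def clamp01(x):
    """Limit a value to the range 0..1."""
    return max(0.0, min(1.0, x))


def cutoff_from_control(value):
    """Map a control value in 0..1 to a cutoff in Hz on a logarithmic scale."""
    v = clamp01(value)
    return MIN_CUTOFF_HZ * (MAX_CUTOFF_HZ / MIN_CUTOFF_HZ) ** v


def equal_power_pan(position):
    """Map a hand position in 0..1 (left..right) to (left_gain, right_gain)."""
    angle = clamp01(position) * math.pi / 2
    return math.cos(angle), math.sin(angle)
```

A logarithmic scale is used here because equal hand movements then produce roughly equal perceived changes in brightness; a linear mapping would crowd most of the audible change into a small part of the gesture.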

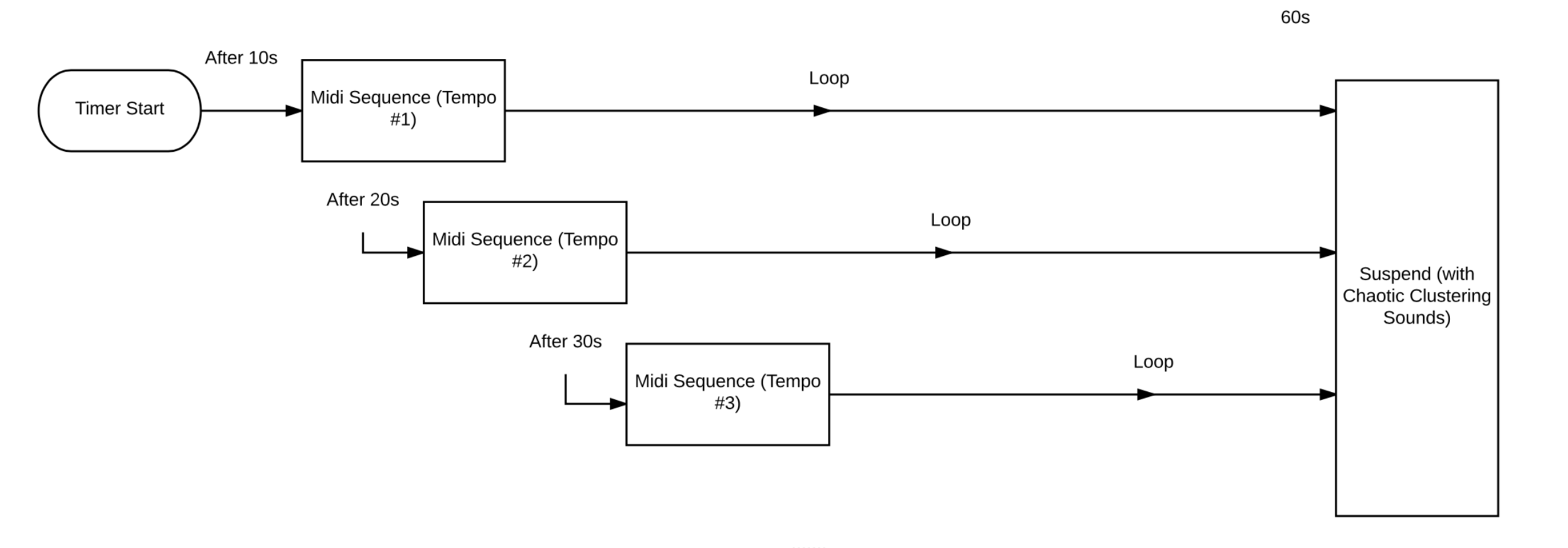

Implementation: “60s” Algorithm

This module contains a timer. Every 10 seconds it triggers a MIDI sequence. There are five MIDI sequences in total. Each has the same notes and the same phrase but plays at a different tempo. The MIDI notes are then sent to Live, where the sound design is done. Once the timer reaches 50 seconds, the sound becomes completely chaotic, and when the timer reaches 60 seconds the sound stops suddenly. The user can then start a new session if desired.
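The timer logic can be summarized as a small state function. The Python below is a sketch under stated assumptions rather than the actual implementation: the phase names and function names are hypothetical, and it assumes the first sequence is triggered 10 seconds after the click, so that the fifth one coincides with the 50-second mark.

```python
TRIGGER_INTERVAL_S = 10   # a sequence is triggered every 10 s (from the text)
NUM_SEQUENCES = 5         # five sequences in total (from the text)
CHAOS_AT_S = 50           # the sound becomes chaotic at 50 s (from the text)
STOP_AT_S = 60            # the sound stops at 60 s (from the text)


def phase(t):
    """Return the module's phase t seconds after the click (names are hypothetical)."""
    if t < 0:
        return "idle"
    if t >= STOP_AT_S:
        return "stopped"
    if t >= CHAOS_AT_S:
        return "chaos"
    return "building"


def sequences_started(t):
    """Number of sequences triggered by time t (assumes the first starts at 10 s)."""
    if t < 0:
        return 0
    return min(NUM_SEQUENCES, int(t // TRIGGER_INTERVAL_S))


def triggers_between(t0, t1):
    """Indices of sequences whose trigger time lies in the interval (t0, t1]."""
    return [i for i in range(NUM_SEQUENCES)
            if t0 < (i + 1) * TRIGGER_INTERVAL_S <= t1]
```

Polling `triggers_between` with the previous and current clock values on each tick ensures that every sequence is started exactly once, even if the clock does not land precisely on a multiple of 10.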

“60s” Micro-polyphony Module Algorithm Flow Chart

Visual Presentation

The particle cluster is based on Federico Foderaro’s patch. Color tracking is used in this section: the wand is painted green, and its center location in the camera image becomes the center of the cluster. The user can move the wand left and right to cast a “magic spell”. In addition, with every 10 seconds that pass, the attraction toward the center of the cluster decreases, and at t = 50 s the particles start to move randomly.
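The weakening attraction can be sketched as follows. This Python is an illustration only and does not reproduce Federico Foderaro’s patch: the linear decay in steps of one fifth, the starting strength, the movement rate, the jitter size, and the function names are all assumptions; the text only states that attraction decreases every 10 seconds and that motion becomes random at 50 seconds.

```python
import random

BASE_ATTRACTION = 1.0   # assumed starting strength (hypothetical)
STEP_S = 10             # attraction drops every 10 s (from the text)
RANDOM_AT_S = 50        # random motion starts at 50 s (from the text)


def attraction(t):
    """Attraction toward the cluster center; assumed to drop by one fifth per step."""
    if t >= RANDOM_AT_S:
        return 0.0
    steps = int(t // STEP_S)
    return BASE_ATTRACTION * (1 - steps / 5)


def step_particle(pos, center, t, rng=random, jitter=0.05, rate=0.1):
    """Advance one particle (x, y) by one frame at time t."""
    k = attraction(t)
    x, y = pos
    cx, cy = center
    if k == 0.0:
        # No attraction left: the particle wanders randomly.
        return (x + rng.uniform(-jitter, jitter),
                y + rng.uniform(-jitter, jitter))
    # Otherwise move a fraction of the way toward the tracked wand position.
    return (x + rate * k * (cx - x), y + rate * k * (cy - y))
```

Here `center` stands for the tracked position of the green wand, so the cluster follows the wand tightly at first and progressively loosens as the 60 seconds run out.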

Challenges

As the project’s main inspiration for gesture mapping is “magic”, the system is supposed to be mysterious in order to create an astonishing performance experience. At the same time, however, transparency should not be sacrificed. The solution is to provide detailed tutorials so that, once the principle is understood, the system is easy to learn. As I observed during the project demonstration, once given an explanation, people became familiar with the instrument and enjoyed it quickly. In that sense, the challenge was addressed in the end.

Low floor/High Ceiling Design

Since the MIDI notes of the leading sound (wind-bell sounds) are mapped to the C major pentatonic scale, everything in this module sounds harmonious. Therefore, even a user without any musical background can play the instrument without any issue. On the other hand, micro-level control such as the velocity mapping raises the ceiling of the system, so that it stays interesting to use over an extended period of time. As the system also creates tension between instrument and user, it aims to push the limits of professional musicians’ skills. Therefore, the system has a low floor and a high ceiling.

Reliability and Future Work

Sometimes the Wekinator classification slows down the system (this is also visible in the demo video below; I intend to record a new video without the slowed-down visual presentation). In the future, a better machine learning classification method should be used to improve the reliability of the system.